Here is the link to the study by Shroyer, Mehta, Thukral, Smiley, Mercaldo, Meyers, and Smith

Here is a link to all the ECGs.

This Week in Cardiology Podcast – August 01, 2025 — transcript here

By John M. Mandrola, MD


AI vs Doctor ECG-Reading for Cath Lab Activation

The American Journal of Emergency Medicine has a neat study out this week comparing the accuracy of cath lab activation (CLA) for ST-elevation myocardial infarction (STEMI)-equivalent and STEMI-mimic ECGs.

I really like this study. And it’s an important problem, as the identification of STEMI in the first minutes of a person’s presentation is crucial.

The aim was to measure doctor accuracy vs the machine learning-based artificial intelligence (AI) algorithm from Queen of Hearts, which is a deep neural network model. You can use it on your phone. It determines the presence or absence of an occlusion myocardial infarction (OMI).

The PMcardio STEMI AI ECG model received FDA Breakthrough Device Designation in March 2025 but is yet to be cleared by FDA for marketing in the US.

___________

New PMcardio for Individuals App 3.0 now includes the latest Queen of Hearts model and AI explainability (blue heatmaps)! Download now for iOS or Android. https://www.powerfulmedical.com/pmcardio-individuals/ (Drs. Smith and Meyers trained the AI Model and are shareholders in Powerful Medical.)

___________

Americans need to wait for FDA approval, but there’s an opportunity to get early access to the PMcardio AI bot through a beta signup. It is available on Android and Apple app stores in the European Union and UK.

Over 2500 hospitals are on the waiting list, and it’s currently being tested in pilot programs at over 60 global centers.

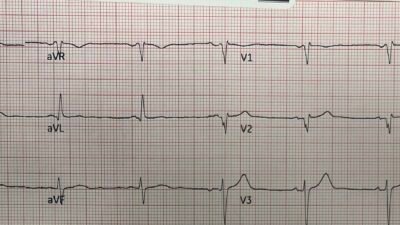

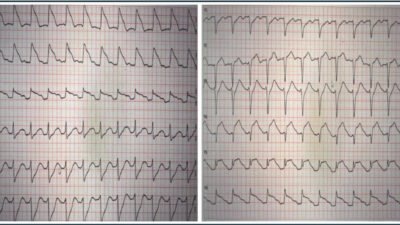

For this study, done in San Antonio, in a hospital system that has two community hospitals and four stand-alone emergency departments (EDs), the authors chose 18 tough ECGs. I know this because they are in the Supplement. And I had to really study them. These included four STEMI-equivalent types which require immediate reperfusion therapy. They added an ECG with Wellens’ T-waves and aVR STEMI. They also included transient STEMI and right bundle branch block (RBBB) with left anterior fascicular block (LAFB) OMI. Eight ECGs representing STEMI-mimics were included to test false-positive cath lab activation.

Again, my initial reaction to the study is that these could be highly selected ECGs, perhaps to accentuate doctor/AI differences. Maybe they were, but looking at them, these are real ECGs, and they are the type of ECGs that cause brain stress in reading them.

One important exclusion was ECGs seen in pericarditis, Takotsubo cardiomyopathy, and Prinzmetal angina since there are limited published criteria differentiating them from OMI.

In sum, there were 12 ECG types that warranted immediate angiography and 6 ECGs that were mimics that warranted no cath lab activation.

The ECGs were shown to 53 emergency medicine docs, 42 cardiologists, and the AI algorithm. The reference standard was angiography: was there an OMI or not? The outcome was binary: CLA or not.

Results

Interpretation accuracies were similar between EM docs and cardiologists; both were 66%.

But both were hugely lower than the AI model, which accurately called cath lab activation (CLA) in 89%.

Doctors most frequently misclassified the de Winter pattern, transient STEMI, hyperacute T-wave OMI, and bundle branch ECGs.

The Queen of Hearts AI algorithm misclassified only two ECG types: left bundle branch block OMI (Sgarbossa (+) LBBB*) and left ventricular aneurysms. These same ECG types also challenged physicians, with only 14% and 58% of physicians correctly interpreting them, respectively.

Finally, EM docs missed 41% of true OMIs (195/477) and overcalled 32% of non-OMIs (133/415), whereas Queen of Hearts AI missed only 11% and overcalled 11%.
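As a quick check on that arithmetic, here is a minimal sketch that recomputes the EM physician percentages from the counts quoted above. The variable names and the `pct` helper are illustrative assumptions, not anything from the study. Sensitivity and specificity are simply the complements of the miss and overcall rates. The AI figures are left out because only percentages, not counts, are quoted for it.

```python
# Counts quoted above for emergency physicians (per-read totals).
missed_omi, total_omi_reads = 195, 477       # true OMIs where CLA was not chosen
overcalled, total_non_omi_reads = 133, 415   # non-OMIs where CLA was chosen


def pct(numerator: int, denominator: int) -> float:
    """Return numerator/denominator as a percentage, rounded to one decimal."""
    return round(100 * numerator / denominator, 1)


miss_rate = pct(missed_omi, total_omi_reads)
overcall_rate = pct(overcalled, total_non_omi_reads)

# Sensitivity and specificity are the complements of the two error rates.
sensitivity = pct(total_omi_reads - missed_omi, total_omi_reads)
specificity = pct(total_non_omi_reads - overcalled, total_non_omi_reads)

print(f"Missed OMIs:        {miss_rate}%")
print(f"Overcalled non-OMI: {overcall_rate}%")
print(f"Sensitivity:        {sensitivity}%")
print(f"Specificity:        {specificity}%")
```

Rounded to whole numbers, these match the 41% and 32% quoted above.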

Summary Key Findings

Overall physician accuracy was low (66 %), consistent with prior studies reporting 70% accuracy using fewer ambiguous ECGs.

There were nearly identical accuracies between EM doctors and cardiologists (65.6% and 65.5%, respectively; P = .969).

The ECG types most frequently misinterpreted include LBBB (±OMI), transient STEMI, and hyperacute T-waves, as well as de Winter T-waves.

The Queen of Hearts AI algorithm was more accurate than physicians (89% vs. 66%, P < .001), correctly classifying all ECGs except left ventricular (LV) aneurysm and LBBB with OMI, indicating potential to improve care and resource utilization.
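The 89% figure is consistent with the AI getting 16 of the 18 ECGs right. The sketch below shows that arithmetic under one assumption that the summary does not state outright: that each of the two misclassified ECG types corresponds to exactly one of the 18 tracings. The variable names are illustrative.

```python
total_ecgs = 18

# Assumption: one tracing each for LV aneurysm and LBBB with OMI.
ai_misclassified = 2

ai_correct = total_ecgs - ai_misclassified
ai_accuracy_pct = round(100 * ai_correct / total_ecgs)

print(f"AI correct on {ai_correct}/{total_ecgs} ECGs = {ai_accuracy_pct}%")
```

With so few tracings, each ECG moves the AI's accuracy by about 5.6 percentage points, which is worth keeping in mind when comparing it with the physician figure.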

Comments

I find this a remarkable study. The AI is clearly better. The ECGs were hard, but they are real, and I’ve seen them reviewed in peer review meetings as missed STEMI.

No one misses the 3-4 mm tombstone ST elevations. It’s the subtle STEMI mimics that are tough. If you are a patient with an occluded left anterior descending (LAD) artery but not a conclusive ECG, you hope for either a) luck, b) a master ECG reader, or c) a really good AI algorithm.

Scientifically, I wonder if the best solution is smart doctors who have seen the patient and have Bayesian priors based on history and general appearance (MIs often look like MIs from the door) plus AI vs just AI. It’s a false comparison because I don’t think that study will ever be done, as it’s hard for me to envision an emergency room without a doctor. (But I could not have imagined medicine with smartphones before smartphones).

Nonetheless, I have no idea why the FDA would not approve such a device for use. It looks like an important adjunct for getting to the proper diagnosis. I see it as similar to point-of-care ultrasound for central venous access. Sure, you can get into a central vein without ultrasound, but why would you?

In the case of ECGs and CLA, sure, you can do it without AI, but why would you? The STEMI equivalents and mimics aren’t rare and the Queen of Hearts looks quite good.

Technology is amazing.

=====================================

MY Comment, by KEN GRAUER, MD (8/3/2025):

This is an important post in Dr. Smith’s ECG Blog — as the article by Shroyer et al contributes yet one more supportive study of the positive influence that AI provides to the CLA (Cath Lab Activation) decision-making process, as compared to ECG interpretation solely by experienced clinicians (ie, the 53 emergency physicians and 42 cardiologists who participated in Shroyer’s study).

- That said, I believe a KEY Question remaining is, “How BEST to use the data provided by this study?”

- Implicit within this KEY Question is the sub-Question, “How BEST to utilize the expertise that this AI app clearly provides?”

That the PMcardio for Individuals App 3.0 ( = the latest Queen-Of-Hearts model, with AI Explainability) clearly does provide expertise for CLA decision-making — should be obvious from the manner in which the AI QOH app has been developed (namely through input provided by Drs. Smith and Meyers — from an ever-expanding data source based on thousands of actual ECGs).

- Validity of this data source is meticulously verified by cath lab documentation aimed at answering the KEY clinical question of, “Should the cath lab be activated for this patient based on this history and this ECG?”.

- Attestation to the qualifications of Drs. Smith and Meyers for engineering the teaching of the AI QOH app — is the amazing library of nearly 2,000 clinical cases accumulated over the past 17 years on Dr. Smith’s ECG Blog.

- To Emphasize: The AI QOH app continues to get better, as its data base is ever expanding with refinements in AI interpretation integrated into each new version of the application that comes out.

The 18 ECGs in Shroyer’s Study:

As per Dr. John Mandrola’s 6-minute commentary (that begins at 5:45 in the above cited Audio presentation) — the 18 ECGs that form the basis of Shroyer’s study “were hard” — and — “I really had to study them” — but — “these are real ECGs, and they are the type of ECGs that cause brain stress in reading them”.

MY Suggestion: I encourage all readers of this ECG Blog to review these 18 ECGs yourself — and then compare your answers to the QOH answers. I guarantee this will be a highly insightful use of your time — as you then compare your answers with QOH answers, and then gain enlightenment from cardiac cath results and from clinical disposition of each case.

MY Thoughts:

Having taught ECG interpretation for 40+ years to medical students, residents, paramedical personnel, and especially to physicians of all medical specialties locally, nationally, and now on an international basis — I remain an optimist.

- I believe clinicians, whatever their medical training, can be taught to improve their recognition of acute ECG patterns, and thereby improve their ability to more time-efficiently recognize the need to activate (or not activate) the cath lab.

Caveat #1: The 18 ECGs that make up this study provide but a glimpse of what we regularly see from clinical cases sent to us from clinicians of all experience levels from around the world.

- To those of us who regularly scrutinize the gamut of emergency ECG tracings, many of the 18 tracings in the Shroyer study are straightforward, yet the 95 emergency physicians and cardiologists who reviewed these tracings misclassified fully 1/3 of them.

- That said — I strongly suspect the “accuracy” of study participants would have been even lower had there been a greater percentage of subtle OMI+/STEMI- tracings.

Caveat #2: Our current medical literature data is all-too-often flawed. As a result — the self-fulfilling prophecy in support of the outdated and clinically inferior STEMI paradigm is maintained (Evidence in favor of OMI Paradigm superiority is easily accessible from the Research & Resources Menu at the top of every page in Dr. Smith’s ECG Blog — under OMI Literature Timeline and OMI Facts & References).

- As an example of this self-fulfilling prophecy — We have published numerous cases on Dr. Smith’s ECG Blog of acute OMIs that do not qualify as a STEMI with the required millimeter-based amount of ST elevation on the initial or repeat ECGs. Eventually, some of these OMI+ tracings do qualify as a “STEMI” (ie, sufficient ST segment elevation eventually does occur — albeit hours, or even 1-2 days after the time that the initial ECG was OMI+).

- If the interventionist who had been refusing to cath a given patient with an OMI+/STEMI- ECG, now “immediately” caths this patient when one of the repeat ECGs finally satisfies STEMI criteria — What goes into the data base?

- What goes into the data base is that “the interventionist activated the cath lab ‘immediately’ (ie, within minutes, as soon as STEMI criteria were satisfied)”, albeit without appreciation that PCI done 3-to-10 (or-more) hours after an OMI+ initial ECG is unlikely to provide significant benefit. But the flawed STEMI paradigm stays “cemented” in the mindset of those married to this outdated concept.

Caveat #3: As good as this study by Shroyer et al is — design of the “perfect study” remains an elusive goal.

- Sometimes the “correct answer” for a given patient with a given ECG may be that prompt cath is indicated because there is enough suspicion of an ongoing coronary occlusion to warrant a definitive answer.

- Sometimes it is better to rule out an acute OMI, rather than “sit on” a case for hours with indecision.

- And sometimes … “Ya just gotta be there!”. These situations in Caveat #3 are difficult to “test” for.

MY Approach to the Problem:

I believe the BEST approach for optimizing the expertise of the AI QOH app — is to combine it with clinician decision-making.

- As good as the AI QOH app is — it is not perfect. Thanks to the ongoing efforts of Drs. Smith and Meyers with Powerful Medical — mistakes/oversights that earlier versions of QOH made have been corrected. But this remains an ongoing process. Clinician judgment remains important.

- Clinicians learn best IF they force themselves to interpret each ECG they encounter before they look at the QOH interpretation. Much can be learned from AI explainability that provides a percentage probability prediction for each of the 12 leads that prove influential in AI decision-making.

- If you as the clinician find your interpretation is consistent with QOH, then 1 + 1 = 5, and your level of confidence in your ECG interpretations is increased (which increases the likelihood of a correct decision for the patient!).

- In contrast, if there is a discrepancy between clinician and AI interpretations, it’s back to the “drawing board” to try to figure out why, all-the-while remembering that QOH is up to 90% accurate in assessing ECGs for the likelihood of an acute OMI (whereas most clinicians are not nearly as good). In such cases, AI explainability offers an optimal opportunity to learn why QOH answered differently than you did.

- Do your best to follow-up on all of your cases that you possibly can! This is the only way to optimally learn. It may be that at times both you and QOH may be “wrong”. Following up on such cases (ideally finding out not only about the cath report — but also on what happened to the patient) is the way that all of us learn.

- Develop a channel to get expert opinions as you need them (ie, from clinicians who are truly savvy in recognizing OMI+/STEMI- tracings). If unable to access expert opinion at the time you are seeing the patient, then do so afterward, by making screen shots of key serial tracings with notation of key test results, and ideally with correlation of symptom severity at the time each ECG is recorded (I recommend writing on each actual ECG, on a scale from 1-to-10, how severe the patient’s chest pain is at the time that ECG is recorded). In 2025, there are no excuses. The internet makes expert interpretation accessible to all.

- Finally, stay tuned to Dr. Smith’s ECG Blog for a never-ending supply of new and challenging clinical cases.